👋 About Me

🔥 News

- 2026.01: 🎉 Two papers, SvS and RLVR Incentivizes Correct Reasoning, have been accepted to ICLR 2026.

- 2025.09: 🎉 Our paper SwS has been accepted by NeurIPS 2025.

- 2025.09: 🎉 Our paper GuiLoMo has been accepted by EMNLP 2025.

- 2025.09: 🎓️ Started my PhD research journey at UCLA.

- 2025.05: 🎓 Graduated from my Master’s program at Tsinghua University. Thanks to all my advisors and friends!

📖 Education

- Sep. 2025 - Jun. 2029 (Expected) Ph.D., Statistics and Data Science, University of California, Los Angeles, USA.

- Aug. 2022 - Jun. 2025 M.Sc., Data Science and Information Technology, Tsinghua University, Beijing, China.

- Sep. 2018 - Jun. 2022 B.Sc., Electronic Information Science and Technology, Sun Yat-sen University, Guangzhou, China.

📑 Selected Publications

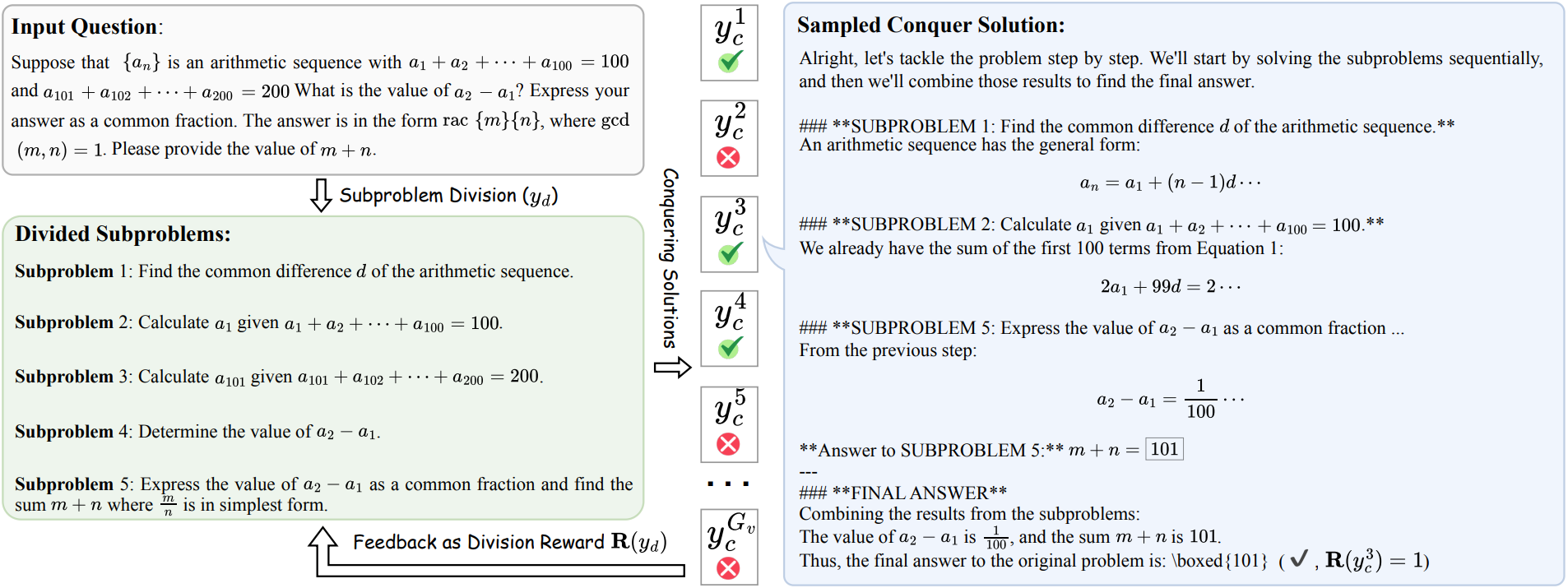

Training LLMs for Divide-and-Conquer Reasoning Elevates Test-Time Scalability

Xiao Liang, Zhong-Zhi Li, Zhenghao Lin, Eric Hancheng Jiang, Hengyuan Zhang, Yelong Shen, Kai-Wei Chang, Ying Nian Wu, Yeyun Gong, Weizhu Chen

We introduce an end-to-end RL framework to endow LLMs with divide-and-conquer reasoning capabilities, enabling a higher performance ceiling and stronger test-time scalability.

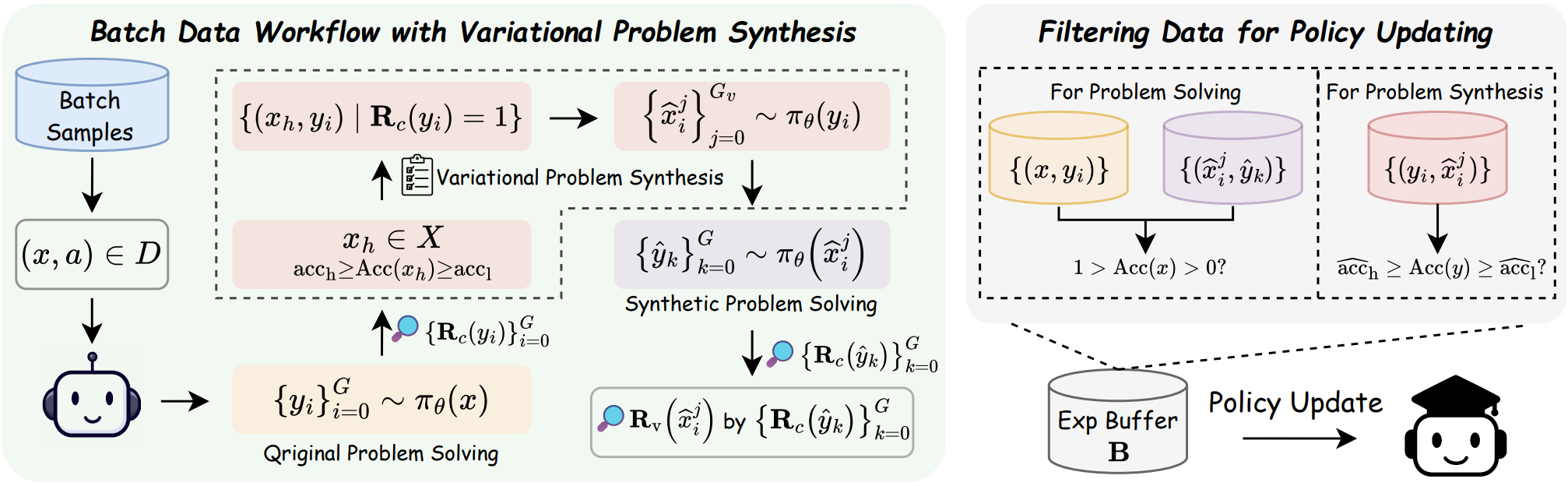

Beyond Pass@1: Self-Play With Variational Problem Synthesis Sustains RLVR

Xiao Liang*, Zhong-Zhi Li*, Yeyun Gong, Yelong Shen, Ying Nian Wu, Zhijiang Guo, Weizhu Chen

We propose an online Self-play with Variational Problem Synthesis strategy for RLVR training that iteratively leverages model responses to synthesize variational problems for augmentation.

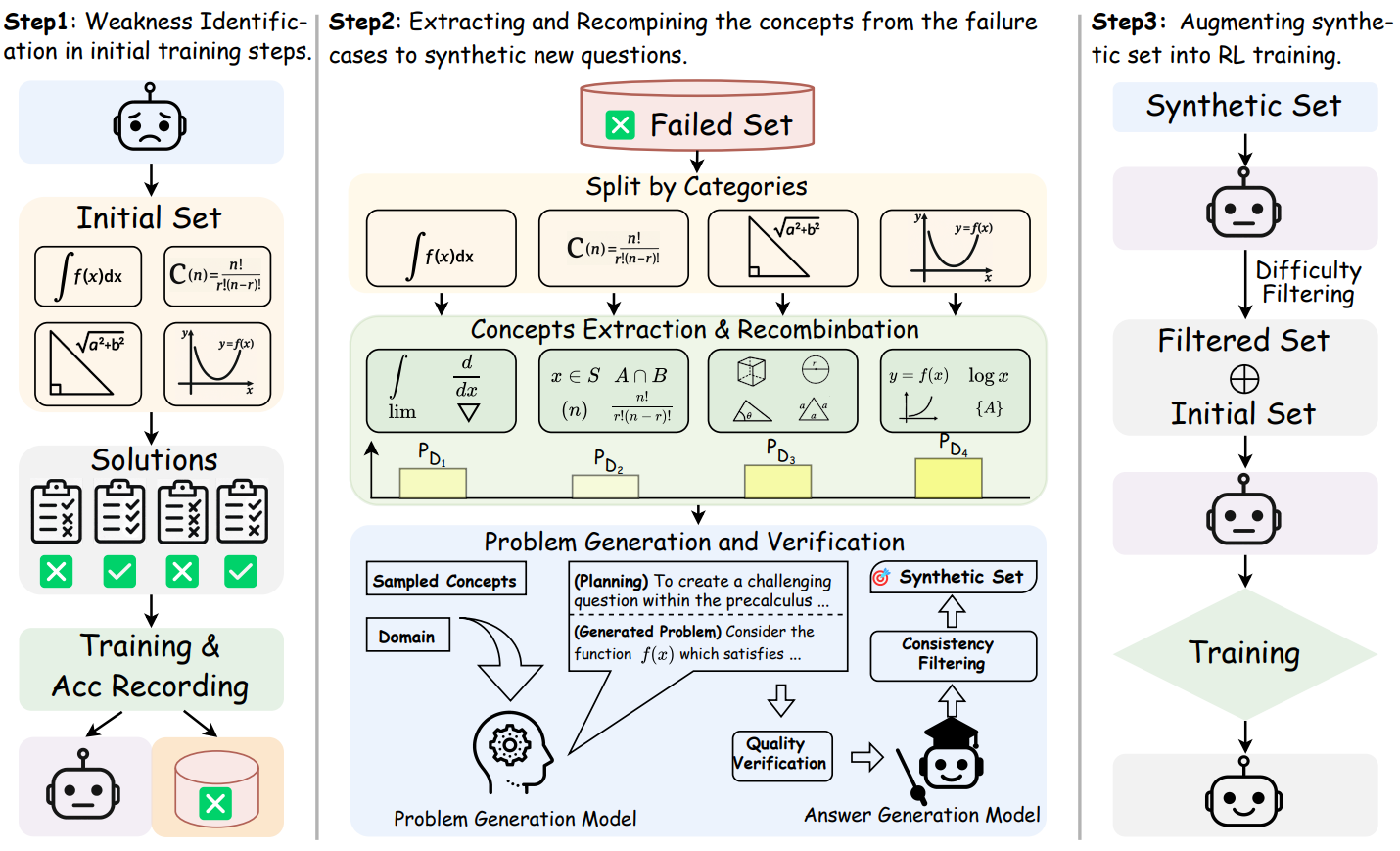

SwS: Self-aware Weakness-driven Problem Synthesis in Reinforcement Learning for LLM Reasoning

Xiao Liang*, Zhong-Zhi Li*, Yeyun Gong, Yang Wang, Hengyuan Zhang, Yelong Shen, Ying Nian Wu, Weizhu Chen

We introduce a Self-aware Weakness-driven Problem Synthesis framework that identifies and leverages model weaknesses for problem augmentation in RLVR.

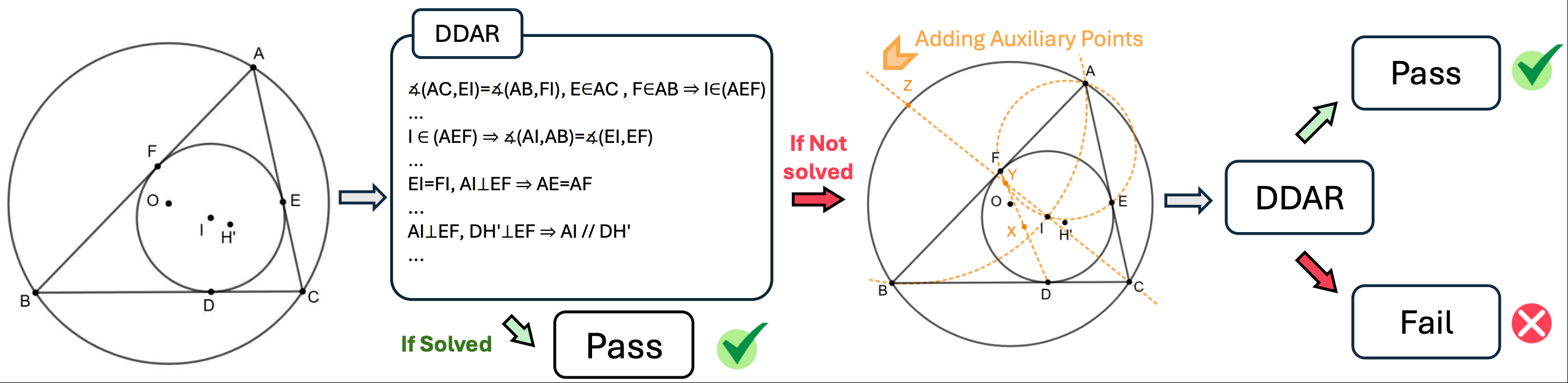

Gold-Medal-Level Olympiad Geometry Solving with Efficient Heuristic Auxiliary Constructions

Boyan Duan, Xiao Liang, Shuai Lu, Yaoxiang Wang, Yelong Shen, Kai-Wei Chang, Ying Nian Wu, Mao Yang, Weizhu Chen, Yeyun Gong

We present a highly efficient geometry theorem proving method that runs entirely on CPUs and achieves gold-medal-level performance on IMO problems.

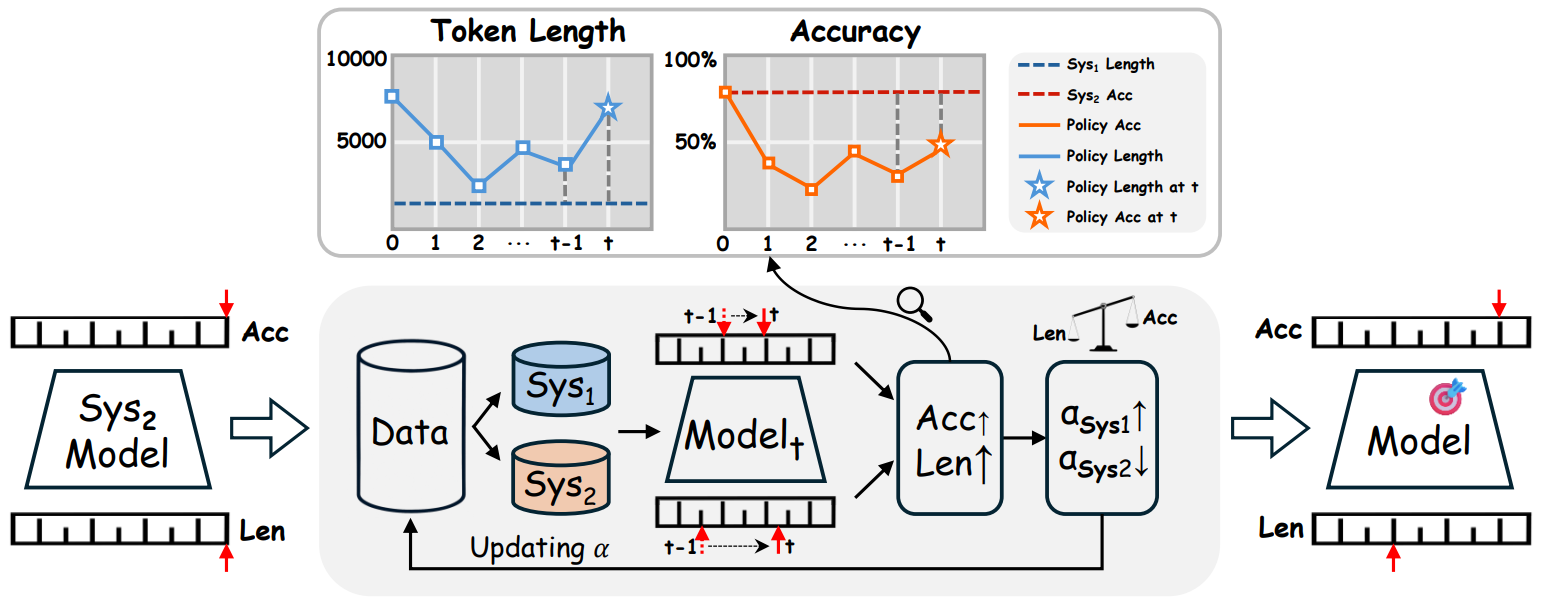

TL;DR: Too Long, Do Re-weighting for Efficient LLM Reasoning Compression

Zhong-Zhi Li*, Xiao Liang*, Zihao Tang, Lei Ji, Peijie Wang, Haotian Xu, Xing W, Haizhen Huang, Weiwei Deng, Yeyun Gong, Ying Nian Wu, Zhijiang Guo, Xiao Liu, Fei Yin, Cheng-Lin Liu

We propose a dynamic ratio-based training pipeline that balances System-1 and System-2 data to reduce redundant reasoning in LLMs.

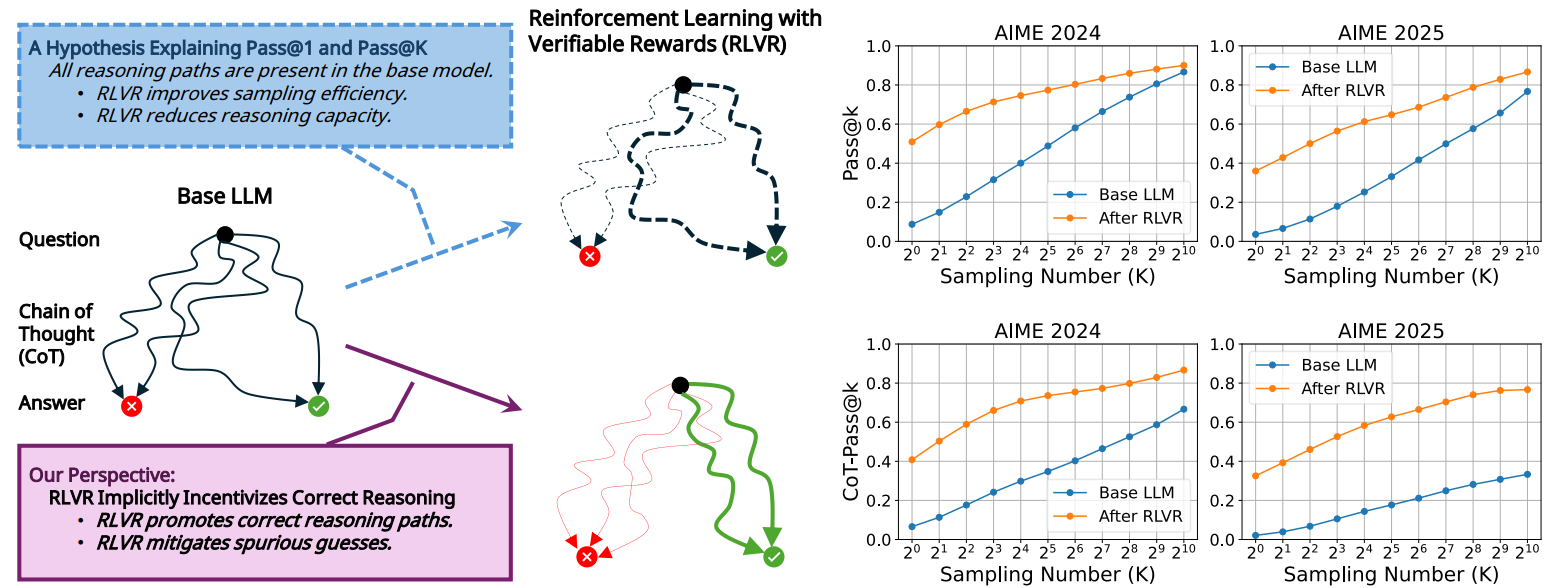

Xumeng Wen*, Zihan Liu*, Shun Zheng*, Zhijian Xu, Shengyu Ye, Zhirong Wu, Xiao Liang, Yang Wang, Junjie Li, Ziming Miao, Jiang Bian, Mao Yang

We introduce a stricter reasoning-aware metric beyond pass@k and show that RLVR uniquely incentivizes logically consistent reasoning.

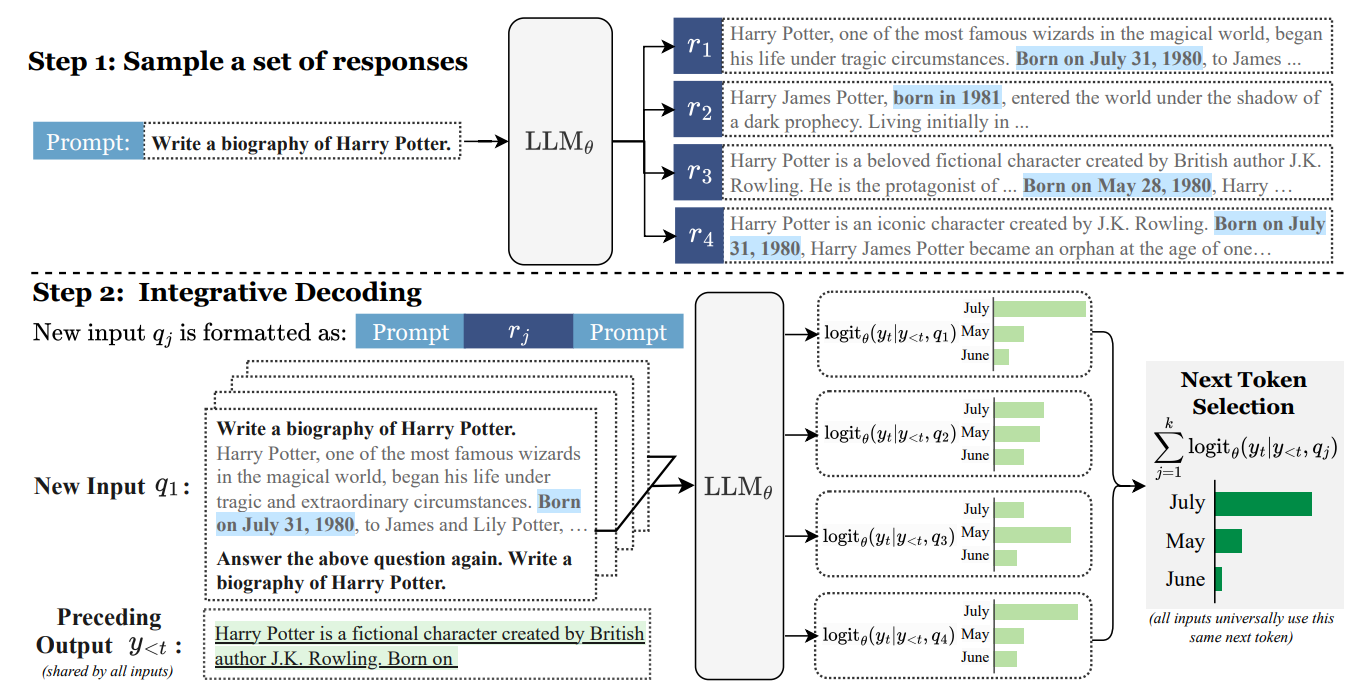

Integrative Decoding: Improve Factuality via Implicit Self-consistency

Yi Cheng, Xiao Liang, Yeyun Gong, Wen Xiao, Song Wang, Yuji Zhang, Wenjun Hou, Kaishuai Xu, Wenge Liu, Wenjie Li, Jian Jiao, Qi Chen, Peng Cheng, Wayne Xiong

We present a self-consistency based decoding strategy that improves factual accuracy in long-form generation.

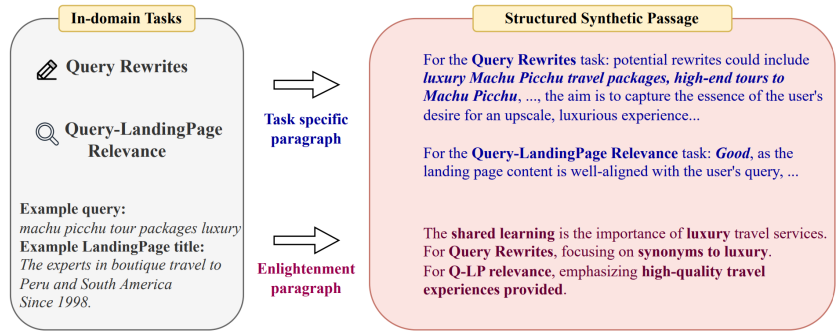

Task Oriented In-Domain Data Augmentation

Xiao Liang*, Xinyu Hu*, Simiao Zuo, Yeyun Gong, Qiang Lou, Yi Liu, Shao-Lun Huang, Jian Jiao

We propose a task-oriented in-domain data augmentation framework consisting of in-domain data selection and task-specific synthesis.

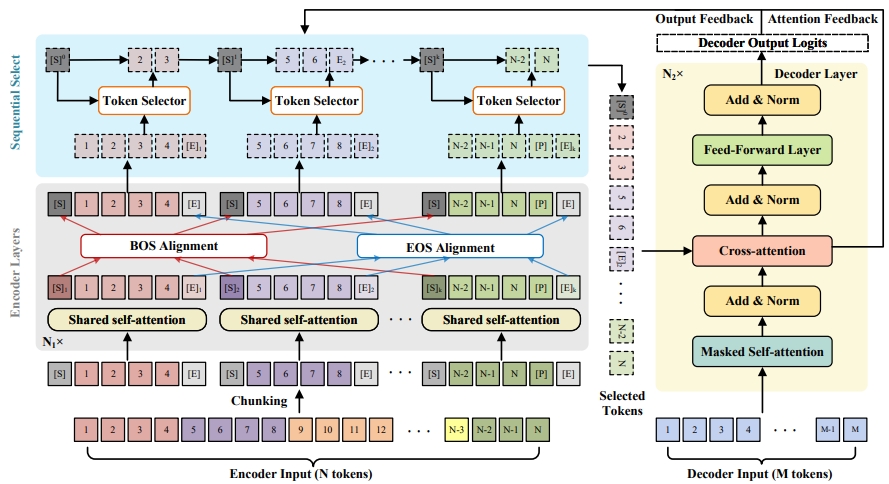

Chunk, Align, Select: A Simple Long-sequence Processing Method for Transformers

Jiawen Xie, Pengyu Cheng, Xiao Liang, Yong Dai, Nan Du

We propose a reinforcement-learning-based token selection framework for efficient long-sequence processing.

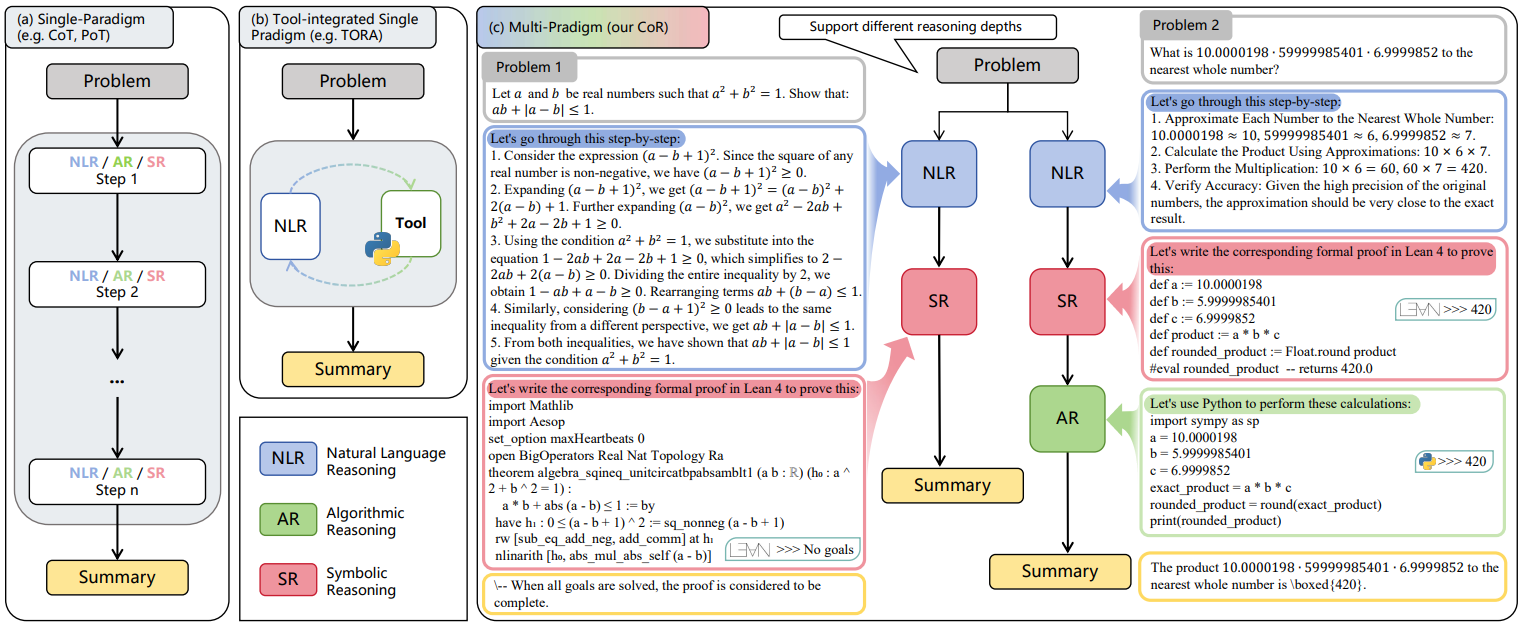

Yiyao Yu, Yuxiang Zhang, Dongdong Zhang, Xiao Liang, Hengyuan Zhang, Xingxing Zhang, Ziyi Yang, Mahmoud Khademi, Hany Awadalla, Junjie Wang, Yujiu Yang, Furu Wei

We introduce a unified framework that integrates natural language, algorithmic, and symbolic reasoning for mathematical problem solving.

🧑‍💻 Experience

- (Nov. 2023 - Aug. 2025) Research Intern, Microsoft Research & CoreAI.

  Mentors: Yeyun Gong, Yelong Shen, Weizhu Chen

  Working on LLM reasoning, pre-training, and reinforcement learning.

- (Mar. 2023 - Sep. 2023) Research Intern, AI Lab, Tencent Inc., Guangdong, China.

  Mentor: Pengyu Cheng

  Working on large language models and long-sequence processing.

🏆 Honors and Awards

- Graduate Dean’s Scholar Award (GDSA, Amount: $14,500), UCLA, California, 2025

- Outstanding Master Graduate Thesis, Tsinghua University, Beijing, 2025

- Second Prize Scholarship, Tsinghua University, Beijing, 2024

- Outstanding Graduate Thesis, Sun Yat-sen University, Guangdong, 2022

- Outstanding Graduate Student, Sun Yat-sen University, Guangdong, 2022

- Second Prize Scholarship, Sun Yat-sen University, Guangdong, 2019 - 2022

- 1st Place, 2022 Tsinghua Open Hack Competition (Multimodal Learning Track), Tsinghua University, Beijing, 2022